How to assess knowledge?

As a medical teacher, you want to equip your students with robust knowledge. But how do you truly gauge their understanding, application, and synthesis of this crucial information?

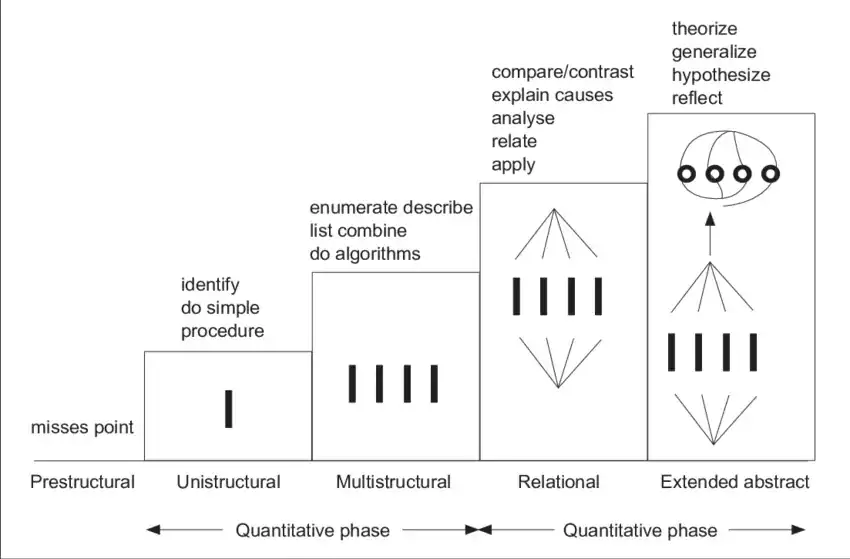

Knowledge and SOLO taxonomy

"Knowledge" in medical education is more than memorizing facts. It is a complex combination of understanding, application, and critical thinking. Assessing such a multifaceted construct requires robust tools, and here at KI we mainly use the SOLO (Structure of the Observed Learning Outcome) taxonomy as a framework for medical educators.

Different assessment methods

Summative assessment of student knowledge in medical education evaluates students' understanding and retention of theoretical concepts and principles at the end of a learning period. Below are common methods for the summative assessment of knowledge (practical and clinical skills are excluded). Where available, we have also added a published paper from KI related to the method:

- Multiple-Choice Exams: These test recall, comprehension, and application of knowledge. They’re efficient for large classes and provide objective scoring.

- Essays: These assess students’ understanding, reasoning, and writing skills. They allow for creativity and individual expression.

- Oral Exams: These evaluate students’ ability to articulate knowledge and think on their feet. They can be structured or unstructured.

- Short Answer Questions: These test students’ ability to succinctly express knowledge and understanding. They’re easier to grade than essays.

- Case Studies: These assess students’ ability to apply theoretical knowledge to real-world scenarios. They promote critical thinking and problem-solving skills.

  KI paper: Courteille, O., Fahlstedt, M., Ho, J., Hedman, L., Fors, U., Holst, H., Felländer-Tsai, L., & Möller, H. (2018). Learning through a virtual patient vs. recorded lecture: A comparison of knowledge retention in a trauma case. International Journal of Medical Education, 9, 86-92. https://doi.org/10.5116/ijme.5aa3.ccf2


Each method has its strengths and weaknesses, and the choice depends on the learning objectives and the nature of the subject matter. Remember, a mix of methods often provides a more comprehensive assessment of student learning.

Optimizing Your Assessments: A Utility-Based Approach

Beyond the scores it produces, the quality of an assessment lies in its utility. As a medical teacher, you can use van der Vleuten and Schuwirth's (2005) utility formula to check that your summative knowledge assessments are effective:

Utility = Reliability × Validity × Feasibility × Educational Impact × Cost-Effectiveness
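Because the factors are multiplied rather than added, a weak score on any one of them drags the overall utility down, and a zero on any factor makes the assessment worthless no matter how strong the others are. The formula is best read as a conceptual model rather than a literal calculation, so the minimal Python sketch below only illustrates that multiplicative logic. It assumes each factor is rated on a 0-1 scale; the scale, the `utility` function, and the example ratings are hypothetical and not part of the published model.

```python
from math import prod
from typing import Mapping

# The five factors named in the utility formula (hypothetical key names).
FACTORS = (
    "reliability",
    "validity",
    "feasibility",
    "educational_impact",
    "cost_effectiveness",
)


def utility(ratings: Mapping[str, float]) -> float:
    """Multiply the five factor ratings (each assumed to lie on a 0-1 scale)."""
    for name in FACTORS:
        value = ratings[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Multiplicative, not additive: one factor at 0 makes the product 0.
    return prod(ratings[name] for name in FACTORS)


# Hypothetical ratings for an imagined MCQ exam (illustrative only).
mcq_exam = {
    "reliability": 0.9,
    "validity": 0.6,
    "feasibility": 0.9,
    "educational_impact": 0.5,
    "cost_effectiveness": 0.8,
}

print(round(utility(mcq_exam), 4))             # 0.1944
print(utility({**mcq_exam, "validity": 0.0}))  # 0.0
```

The second call shows why no factor can be ignored: high reliability and feasibility cannot compensate for an exam that does not measure the intended learning outcomes.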

1. Reliability (Consistency): Would different instructors give the same score for the same performance? Use clear rubrics, calibrated scoring, and peer review to minimize subjective interpretations.

2. Validity (Relevance): Does the assessment measure what it is intended to measure, namely the intended learning outcomes? Align assessments with learning objectives, avoid ambiguous items, and use diverse methods (written and oral) to capture different aspects of knowledge.

3. Feasibility (Practicality): Can the assessment be administered and graded efficiently? Consider available resources, student workload, and technology integration for smooth implementation.

4. Educational Impact (Learning): Does the assessment promote learning beyond just getting a grade? Provide timely, specific feedback, encourage self-assessment, and design tasks that require higher-order thinking.

5. Cost-Effectiveness (Resource Optimization): Is the assessment efficient in terms of time, effort, and cost? Explore digital tools, collaborative grading, and cost-effective development procedures.
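The reliability question in point 1 (would different examiners give the same score for the same performance?) can be checked by having two examiners grade the same set of answers and comparing their grades. Raw percentage agreement overstates consistency, because some agreement happens by chance. Cohen's kappa is one common statistic that corrects for this; it is our choice for illustration and is not prescribed by this page. The minimal Python sketch below assumes pass/fail grading, and the `cohens_kappa` function and the grades are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two raters grading the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must grade the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same grade by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement: chance of matching given each rater's grade frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[g] * counts_b[g] for g in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters gave one identical grade to every item; kappa is
        # undefined here, so this sketch reports perfect agreement.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Invented pass/fail grades from two examiners for the same ten answers.
examiner_1 = ["pass", "pass", "fail", "pass", "fail",
              "pass", "pass", "fail", "pass", "pass"]
examiner_2 = ["pass", "fail", "fail", "pass", "fail",
              "pass", "pass", "pass", "pass", "pass"]

agreement = sum(a == b for a, b in zip(examiner_1, examiner_2)) / len(examiner_1)
print(agreement)                                       # 0.8
print(round(cohens_kappa(examiner_1, examiner_2), 2))  # 0.52
```

Here the examiners agree on 80% of the answers, but once chance agreement is removed kappa is only about 0.52. Clear rubrics and calibrated scoring aim to close exactly this kind of gap.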

By continuously evaluating each factor and making adjustments, you can ensure your assessments are not just reliable and valid, but also truly impactful and cost-effective, optimizing the learning experience for your medical students.

“A final piece of advice would be the suggestion . . . to look for the possibility of cooperation with other departments or faculties since the production of high-quality test material can be tedious and expensive.”

(Schuwirth et al., 1999)

References and related material

- Sam, A. H., Wilson, R., Westacott, R., Gurnell, M., Melville, C., & Brown, C. A. (2021). Thinking differently – Students’ cognitive processes when answering two different formats of written question. Medical Teacher, 43(11), 1278-1285. https://doi.org/10.1080/0142159X.2021.1935831

- Hauer, K. E., Boscardin, C., Brenner, J. M., van Schaik, S. M., & Papp, K. K. (2019). Twelve tips for assessing medical knowledge with open-ended questions: Designing constructed response examinations in medical education. Medical Teacher, 42(8), 880-885. https://doi.org/10.1080/0142159x.2019.1629404

- NBME® ITEM-WRITING GUIDE. Download the guide and learn how to write questions that more effectively assess your students' knowledge: https://www.nbme.org/item-writing-guide