How to assess clinical skills?

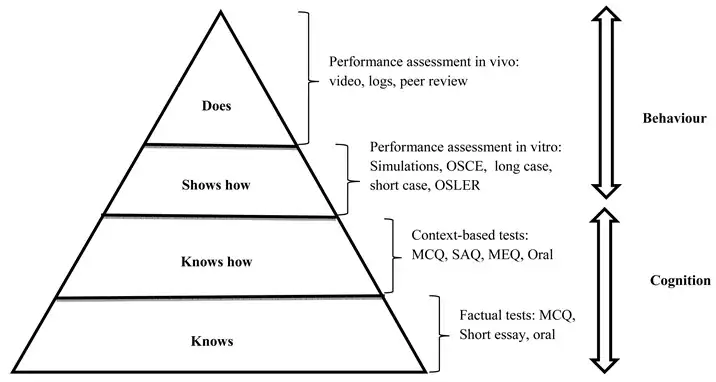

Medical education must assess students' practical abilities as well as their written knowledge. No single method fits every case: the choice depends on what is being assessed (history taking, diagnosis, etc.) and why (to support student learning or to evaluate job performance). Like fitting a key to a lock, the assessment method should match the specific skill and the purpose.

How does a student learn a practical or clinical skill?

Simpson's taxonomy of psychomotor skill development provides a framework for understanding how motor skills are acquired. It consists of seven stages, ordered from the simplest to the most complex. Here are examples of each stage for a medical student:

1. Perception: Recognizing stimuli and cues relevant to the task.

Example: Identifying anatomical structures in a cadaver during dissection.

2. Set: Readying oneself for action, mentally preparing to perform a skill.

Example: Mentally visualizing the steps of a clinical procedure, such as placing an IV line, before actually performing it.

3. Guided Response: Following instructions and demonstrating the skill under guidance.

Example: Practicing physical examination techniques under the supervision of a clinical preceptor, such as performing a neurological assessment.

4. Mechanism: Performing the skill with increasing proficiency and accuracy.

Example: Mastering the technique of suturing through repetitive practice on simulation models or animal tissue.

5. Complex Overt Response: Executing the skill with coordination and efficiency.

Example: Performing a complete physical examination on a patient, including inspection, palpation, percussion, and auscultation, systematically and thoroughly.

6. Adaptation: Modifying the skill based on changing circumstances or unexpected events.

Example: Adjusting the approach to intubation based on the patient's airway anatomy or response to initial attempts.

7. Origination: Creating new variations or applications of the skill.

Example: Innovating new surgical techniques or approaches based on research findings or clinical experience, such as developing a novel minimally invasive procedure.

These examples illustrate how medical students progress during their training from basic perceptual abilities to mastery of advanced motor skills, following Simpson's taxonomy of psychomotor skill development.

Different methods

Here are common methods for assessing students' practical and clinical skills in health professions education.

- Objective Structured Clinical Examinations (OSCEs): These are stations where students perform specific tasks under observation.

- Direct Observation of Procedural Skills (DOPS): An assessor observes students performing a procedure in a real clinical setting.

- Mini-Clinical Evaluation Exercise (Mini-CEX): This involves direct observation and feedback of a clinical encounter.

- Portfolios: These are collections of evidence of a student’s competence over time.

Ensuring Quality in Clinical Skills Assessments

Here again we follow Van der Vleuten and Schuwirth's (2005) utility formula as a framework to optimize assessments:

Utility = Reliability × Validity × Feasibility × Educational Impact × Cost-Effectiveness
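Read literally, the multiplicative form means that a weak score on any single factor pulls the overall utility down, and a score of zero on one factor makes the whole assessment useless however strong the others are. The Python sketch below illustrates this. It assumes a hypothetical 0-to-1 rating scale for each factor, which is not part of the original framework; the function name `utility` and the example scores are invented for illustration, and the formula is better treated as a way of thinking about trade-offs than as a calculation to run on real data.

```python
from math import prod


def utility(reliability, validity, feasibility, educational_impact, cost_effectiveness):
    """Multiply the five factors, each rated on an assumed 0-to-1 scale."""
    factors = (reliability, validity, feasibility, educational_impact, cost_effectiveness)
    for factor in factors:
        if not 0.0 <= factor <= 1.0:
            raise ValueError("each factor must lie between 0 and 1")
    return prod(factors)


# Hypothetical assessment that is good, but not perfect, on every factor.
balanced = utility(0.8, 0.8, 0.8, 0.8, 0.8)

# Hypothetical assessment that is perfect on four factors but unaffordable.
lopsided = utility(1.0, 1.0, 1.0, 1.0, 0.0)

print(f"balanced: {balanced:.3f}")
print(f"lopsided: {lopsided:.3f}")
```

In this sketch the balanced assessment ends up with a higher utility than the lopsided one, which is the trade-off the formula is meant to highlight: excelling on some factors cannot compensate for failing on another.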

1. Reliability (Consistency): Would different examiners give the same score for the same performance? Use standardized rubrics, calibrated scoring, and inter-rater reliability checks to minimize subjectivity.

2. Validity (Relevance): Do assessments truly measure the intended skills? Align assessments with learning objectives, ensuring tasks reflect real-world clinical scenarios and competencies.

3. Feasibility (Practicality): Are assessments efficient and adaptable to your resources? Consider simulation tools, standardized patients, and technology integration for smooth implementation.

4. Educational Impact (Learning): Do assessments promote skill development beyond just passing? Provide detailed feedback, encourage self-reflection, and incorporate formative elements to guide improvement.

5. Cost-Effectiveness (Resource Optimization): Are assessments economical in terms of time, effort, and cost? Explore resource-sharing, collaborative assessment, and cost-effective simulation solutions.

By continuously evaluating each factor and striving for improvement, you can ensure your clinical skills assessments are not just reliable and valid, but also truly impactful and cost-effective, preparing your students for confident practice in the real world.
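One way to carry out the inter-rater reliability check mentioned under Reliability is Cohen's kappa, which measures how far two examiners agree beyond what chance alone would produce. The sketch below is a minimal illustration, not a prescribed procedure: it assumes two examiners have each given a pass/fail judgement to the same ten student performances, and the examiner data and the function name `cohens_kappa` are made up for the example.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items where both raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: based on how often each rater used each category.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used one identical category throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical pass/fail judgements on the same ten OSCE performances.
examiner_1 = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail", "pass", "pass"]
examiner_2 = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass", "pass", "pass"]

kappa = cohens_kappa(examiner_1, examiner_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

Here the examiners agree on 8 of 10 performances, but because both pass most students, much of that agreement could arise by chance, so kappa comes out at roughly 0.52 rather than 0.80. A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.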

Examples within Karolinska Institutet

| Method | Published paper |
|---|---|
| Objective Structured Clinical Examinations (OSCEs) | Hultgren C, Lindkvist A, Curbo S, Heverin M. Students’ performance of and perspective on an objective structured practical examination for the assessment of preclinical and practical skills in biomedical laboratory science students in Sweden: a 5-year longitudinal study. J Educ Eval Health Prof. 2023;20:13. doi: 10.3352/jeehp.2023.20.13 |
| Portfolios | Annette Heijne, Birgitta Nordgren, Maria Hagströmer & Cecilia Fridén (2012) Assessment by portfolio in a physiotherapy programme, Advances in Physiotherapy, 14:1, 38-46, DOI: 10.3109/14038196.2012.661458 |

“There has been concern that trainees are seldom observed, assessed, and given feedback during their workplace-based education. This has led to an increasing interest in a variety of formative assessment methods that require observation and offer the opportunity for feedback.” (Norcini & Burch 2007)

Professor John Norcini, recipient of Karolinska Institutet's prize for research in medical education 2014 and President and CEO of the Foundation for Advancement of International Medical Education and Research (FAIMER).

References and related material

- Daniels, V. J., & Pugh, D. (2017). Twelve tips for developing an OSCE that measures what you want. Medical Teacher, 40(12), 1208-1213. https://doi.org/10.1080/0142159x.2017.1390214

- Norcini, J., & Burch, V. (2007). Workplace-based assessment as an educational tool: AMEE Guide No. 31. Medical Teacher, 29(9-10), 855-871. https://doi.org/10.1080/01421590701775453

- Pell, G., Fuller, R., Homer, M., & Roberts, T. (2010). How to measure the quality of the OSCE: A review of metrics – AMEE guide no. 49. Medical Teacher, 32(10), 802-811. https://doi.org/10.3109/0142159x.2010.507716

- Khan, K. Z., Gaunt, K., Ramachandran, S., & Pushkar, P. (2013). The Objective Structured Clinical Examination (OSCE): AMEE Guide No. 81. Part II: Organisation & Administration. Medical Teacher, 35(9), e1447-e1463. https://doi.org/10.3109/0142159x.2013.818635